Leaving Haskell behind

For almost a complete decade—starting with discovering Haskell in about 2009 and right up until switching to a job where I used primarily Ruby and C++ in about 2019—I would have called myself first and foremost a Haskell programmer.

Not necessarily a dogmatic Haskeller! I was—and still am—proudly a polyglot who bounces between languages depending on the needs of the project. However, Haskell was my default language for new projects, and in the absence of strongly compelling reasons to use other languages I would push for Haskell. I used it for command-line tools, for web services, for graphical applications, for little scripts…

At this point, though, I think of my Haskell days as effectively behind me. I haven't aggressively sworn it off—this isn't a “Haskell is dead to me!” piece—but it's no longer my default language even for personal projects, and I certainly wouldn't deliberately seek out “a job in Haskell” in the same way I once did.

So, I wanted to talk about why I fell away from Haskell. I should say up front: this is a piece about why I left Haskell, and not about why you should. I don't think people are wrong for using Haskell, or that Haskell is bad. In fact, if I've written this piece the way I hope to write it, I would hope that people read it and come away with a desire to maybe learn Haskell themselves!

What drew me to Haskell?

The absolute biggest pull for me, when first coming to Haskell, wasn't monads or DSLs or even types. The biggest pull was the ability to reason about code symbolically and algebraically.

Yeah, I know, that might sound like some pretentious nonsense. Bear with me.

The idea here is that certain transformations in Haskell are always correct, which allows you to reason about code in a mechanical but powerful way. A simple example: in Haskell, we can look at a function call and replace it by the body of that function. Say, if we have a function double n = n + n, and we call it with some expression x, we can replace double x with x + x no matter what x is.

This property isn't true in most other programming languages! For example, in many imperative languages, this transformation will fail if we call double with i++ for some variable i: the reason is that i++ modifies the value of i, so double(i++) (which increments i once) will of course produce a different value than (i++) + (i++) (which increments i twice.)
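As a minimal sketch of that substitution: the two callers below differ only in that the call to double has been mechanically replaced by its body. The names viaCall and viaInline are made up for illustration and are not from any library.

```haskell
-- The function from the text: its body is just n + n.
double :: Int -> Int
double n = n + n

-- Hypothetical caller that applies double to a compound expression.
viaCall :: Int -> Int
viaCall x = double (x * 3 + 1)

-- The same caller after replacing the call with the body of double,
-- substituting the argument expression for n.
viaInline :: Int -> Int
viaInline x = (x * 3 + 1) + (x * 3 + 1)
```

Because the argument expression cannot have side effects, viaCall and viaInline return the same result for every input.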

“So what?” you might ask, and that's fair. However, this starts to be very appealing in that certain ways of changing your code are always going to be mechanically correct. This is unbelievably liberating. There's a kind of fearless refactoring that it enables, where certain mechanical transformations, each easily verified as correct, can be stacked on top of each other to do wild but ultimately safe changes to your code overall. Doing large refactorings in, say, a Ruby codebase can be deeply harrowing, while doing large refactorings in a Haskell codebase can be a complete breeze.

Or, as the mathematician Hermann Weyl once said:

“We now come to the decisive step of mathematical abstraction: we forget what the symbols stand for. ...[The mathematician] need not be idle; there are many operations which he may carry out with these symbols, without ever having to look at the things they stand for.”

This same approach—forgetting what the code means and yet still being able to transform it in productive and powerful ways—is possible with Haskell more than with any other language I've used[1].

Of course, the other big pull for Haskell is the type system. There's a lot to be said about the totality of modern Haskell's type system, but the core Haskell language strikes a spectacular balance between having a strict type system without it being too noisy or restrictive. Type inference means that most types are implicit in the code, making the process of writing types significantly less onerous than in something like Java, and the flexibility and convenience of typeclasses means that even when you need to think about the types, they're often not too fussy (compared to, say, OCaml's requirement that you use different versions of the arithmetic operators for integers and floats.) At the same time, the fact that the type system can sometimes get in your way is part of the reason for using Haskell.

I would describe good Haskell code as “brittle”, and I mean that as a compliment. People tend to casually use “brittle” to mean “prone to breakage”, but in materials science what “brittle” means is that something breaks without bending: when a brittle material reaches the limits of its strength, it fractures instead of deforming. Haskell is a language where abstractions do not “bend” (or permit invalid programs) but rather “break” (fail to compile) in the face of problems.

And that's often what you want! For example, Haskell has a NonEmpty type which represents a list which is guaranteed to contain at least one element. Operations on NonEmpty which preserve the same length (like modifying each element with map) or which are guaranteed to add to the length (like combining two NonEmpty lists with append) will return NonEmpty. Other operations which reduce the number of elements potentially to zero, like using filter to remove items that match a predicate, will return a plain list, since there's no guarantee they will be non-empty! In other programming languages, you might informally say, “I know that this thing will always have at least one element,” but in Haskell, it is idiomatic to express this directly in the type system and have the compiler double-check your logic.

So if you have a program where you need to supply, say, a NonEmpty list of file paths to examine, then you can't just pass the command-line args to this function directly, because those might be empty: you must check that they're not empty first and handle the empty case accordingly. If I later on add a filtering step, only keeping the files with a relevant file extension, then I must check for an empty resulting list, because I literally cannot accidentally pass an empty list to the function. This program is “brittle” because it can fail to compile in the face of changes which aren't safe, which is incredibly powerful[2].
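The file-path scenario might look roughly like the sketch below. It uses Data.List.NonEmpty from base; describeFiles and examineHaskellFiles are hypothetical names invented for this illustration, and the ".hs" extension is just an assumed example of a relevant file extension.

```haskell
import Data.List (isSuffixOf)
import Data.List.NonEmpty (NonEmpty, nonEmpty)
import qualified Data.List.NonEmpty as NE

-- Hypothetical consumer: it can only be called with at least one path.
describeFiles :: NonEmpty FilePath -> String
describeFiles paths =
  "examining " <> show (NE.length paths) <> " file(s), starting with " <> NE.head paths

-- NE.filter returns a plain list, so the filtered result has to be
-- re-checked with nonEmpty before describeFiles will accept it.
examineHaskellFiles :: [FilePath] -> Either String String
examineHaskellFiles args =
  case nonEmpty args of
    Nothing -> Left "no files given"
    Just paths ->
      case nonEmpty (NE.filter (".hs" `isSuffixOf`) paths) of
        Nothing -> Left "no .hs files given"
        Just hsFiles -> Right (describeFiles hsFiles)
```

Deleting either nonEmpty check turns the mistake into a type error rather than a runtime surprise, which is the sense of “brittle” described above.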

Over time, writing Haskell means you start building programs in a way that maintains program invariants using types so that the compiler can double-check them. Sometimes people take that to mean stuff like type-level computation, but “express invariants using types” can be much simpler. It can mean something as simple as wrapper types for strings to represent whether they've been SQL-escaped, so that your web API gives you a RawString but your database abstraction only accepts SanitizedString and you can't accidentally introduce a code path which forgets to sanitize it. It can mean converting a surface representation filled with Maybe fields and turning it to an internal representation where information is guaranteed to exist. It can mean something being just generic enough to test in isolation.
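A minimal sketch of the wrapper-type idea, assuming a toy escaping rule. RawString and SanitizedString come from the paragraph above; sanitize, buildQuery, and the quote-doubling rule are illustrative assumptions, and real code would more likely rely on parameterized queries.

```haskell
-- Untrusted input as it arrives from, say, a web request.
newtype RawString = RawString String

-- A string that has been through sanitize. In a real module the
-- SanitizedString constructor would not be exported, so that sanitize
-- would be the only way to obtain one.
newtype SanitizedString = SanitizedString String

-- Toy escaping rule (an assumption for illustration only): double every
-- single quote.
sanitize :: RawString -> SanitizedString
sanitize (RawString s) = SanitizedString (concatMap escape s)
  where
    escape '\'' = "''"
    escape c = [c]

-- Hypothetical database layer: it accepts nothing but SanitizedString.
buildQuery :: SanitizedString -> String
buildQuery (SanitizedString name) =
  "SELECT * FROM users WHERE name = '" <> name <> "'"
```

With these definitions, buildQuery (sanitize raw) type-checks, while passing a RawString to buildQuery directly is rejected by the compiler.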

And Haskell's other strength is that the language itself is malleable and powerful, which enables terse domain-specific languages without things like macros. Some of the code I'm the proudest of in Haskell has been simple domain-specific languages: an example is my config-ini library, a library for working with INI configuration files. Instead of setting up an imperative interface to convey how to parse an INI file into your application-specific configuration, you set up a declarative interface that maps specific parts of your configuration type (via lenses) to specific parts of the structure of an INI file, which in turn lets you use that interface to do reading, writing, and diff-minimal update[3]. This is accomplished with a simple monadic DSL:

    configParser :: IniSpec Config ()
    configParser = do
      section "NETWORK" $ do
        cfHost .= field "host" string
        cfPort .= field "port" number
      section "LOCAL" $ do
        cfUser .=? field "user"

DSLs aren't the right choice for everything, but they can be a powerful tool when applied correctly, and Haskell also minimizes the amount of “magic” necessary to make them work. Unlike the elaborate dynamic metaprogramming which powers DSLs in something like Ruby, the features which power DSLs in Haskell are often just flexible syntax and the ability to overload things like monads. Some Ruby DSLs are “infectious”, since you need to do global monkeypatching to enable constructs like 2.days.ago, but Haskell DSLs are often easy to scope to specific files or areas of code and can be clean in their implementation.

Finally, I think a related but understated strength of Haskell is just how natural it makes working with higher-order functions. This is partly syntax, partly semantics, and partly a good synergy between the two. I don't want to overstate the importance of syntax, but I think the fact that you can write (+) in Haskell and that means “a function which takes two numeric arguments and adds them” lets you gravitate towards that rather than other constructs. What's a function to pairwise multiply two lists in Haskell? It's simple:

pairwiseProduct = zipWith (*)

What's the equivalent in Ruby, a language which does have blocks and often permits some aggressive code golfing? It's still terser than, say, Java, but still much less so than the Haskell:

    # assuming at least Ruby 2.7 for the block syntax
    def pairwise_product(xs, ys)
      xs.zip(ys).map {_1*_2}
    end

I once heard it said that Haskell lets you work with functions the way Perl lets you work with strings. Lots of Haskell idioms, like monads, are perfectly expressible in other languages: Haskell just makes them feel natural, while writing a monad in many other languages feels like you have to do lots of busy-work.

What pushed me away from Haskell?

If I had to choose the three big factors that contributed to my gradual loss of interest in Haskell, they were these:

- the stylistic neophilia that celebrates esoteric code but makes maintenance a chore

- the awkward tooling that makes working with Haskell in a day-to-day sense clunkier

- the constant changes that require sporadic but persistent attention and cause regular breakages

What do I mean by stylistic neophilia here? The Haskell community, as a whole, is constantly experimenting with and building new abstractions. Some of these are borrowed from abstract algebra or category theory, and permit abstract manipulation of various problem domains, while others result from pushing more computation to the type level in order to restrict more invalid states and allow the compiler to enforce more specific invariants.

I think these are cool and I'm happy people are doing them! I'm glad that people are experimenting with things like, say, expressing web APIs at the type level or using comonads to express menu systems. These push at the very boundaries of how to express code and address problem domains in radical new ways, bringing unexpected advantages and giving programmers new levels of expressiveness.

I also… don't really want to deal with them on a day-to-day basis. My personal experience has been that very often these sort-of-experimental approaches, while solving some issues, tend to cause many more issues than is apparent at first. An experience I've had several times throughout my Haskell-writing days—both in personal and professional code—is that we'll start with a fancy envelope-pushing approach, see some early advantages, and then eventually tear it out once we discover that the disadvantages have grown large enough that the approach was a net drag on our productivity.

A good concrete example here is a compiler project I was involved in where our first implementation had AST nodes which used a type parameter to represent their expression types: in effect, this made it impossible to produce a syntax tree with a type error, because if we attempted this, our compiler itself wouldn't compile. This approach did catch a few bugs as we were first writing the compiler! It also made many optimization passes into labyrinthine messes whenever they didn't strictly adhere to the typing discipline that we wanted: masses of casts and lots of work attempting to appease the compiler for what should have been simple rewrites. In that project, we eventually removed the type parameter from the AST, because we'd probably have run out of budget if we finished the compiler and appeased GHC every time we tried to write an optimization.

This wasn't an isolated incident: I'd say that in three-quarters of the projects I worked on where we tried a “fancy types” approach, we ended up finding them not worth it. It's also not just me: the entire Simple Haskell movement[4] is predicated on the idea that you get the most benefits out of the language by eschewing the fancier experimental features and sticking to minimal extensions.

And yet, fancier type features are pervasive in the broader community. New libraries are often designed around the fancier features, and there's even a cottage industry of alternative takes on the standard library that try to embed more complicated type features directly into the basic operations of the language. This also informs the direction of the language: you start getting into linear types and even a Haskell-ey take on dependent types, and then those start creeping into libraries you might use, as well.

It can also be an uphill battle to hold the line against these fancier type explorations! As my friend and fellow Haskell programmer Trevor once said, “The road to hell is paved with well-intentioned GADT usage.” Many of these fancily-typed constructs are appealing and do bring some advantages, and many of the disadvantages are delayed. Often, these features make things difficult to change or maintain, which means it can be weeks or months or even years before the drawbacks become fully apparent. And on various occasions, I've replaced complicatedly-typed abstractions with much simplified versions, only to see subsequent programmers notice the lack of fancy types[5] and try to replace those simple abstractions with various kinds of cutting-edge type-level abstractions, only to see the code blow up in size, drop in performance, and lose much of its readability.

As I said before, I don't fault people for exploring these idioms, and it's not impossible to find examples that do end up pulling their weight. (Using data structures indexed by compiler phase is a good example of a “fancy type-level feature” that I've found remarkably useful in the past[6].) But keeping up with all of it is alienating and exhausting, and at some point, it wasn't a stretch for me to look at the celebration of type-level exploration and the amount of work it took to keep it away from the code I was writing and consequently think, “Do I really belong here?”

The awkward tooling is something that I think is sort of obvious if you've tried writing Haskell for any length of time. We've got multiple standard-ish build systems including Cabal and Stack (and I'm led to believe that rules_haskell for Bazel is decent now although I haven't tried it), as well as linters like hlint, editor integrations like Dante or the Haskell Language Server, autoformatters like brittany or ormolu…

All these tools are, well, fine, or at least fine-ish. They usually do what they need to. I am quite confident I have never loved any of them: at best, they did what they needed to do with minimal hassle (which has been my experience with, for example, ormolu) and at worst they've had constant bugs and required regular workarounds but more or less got the job done. It's quite possible that things have changed drastically since I was more involved in Haskell, but during the decade that I was, tooling certainly improved[7] but never really shined.

A big problem is the perpetual Not-Invented-Here where people constantly try to build the newest, best thing. This isn't at all unique to Haskell—in fact, complaints about Haskell build systems look petty next to Python's Hieronymus Bosch-esque ecosystem of build tools and environment managers—but it's still frustrating to see people constantly trying to reinvent every little detail (down to the textual format for Haskell packages, one thing I'm reasonably convinced they got perfectly right[8]) and then leaving it about 95% finished for years.

And if I'm spending my working day with a language, I want the tooling to be great. I want it to be something I can celebrate, something I can brag about. I think Rust's cargo is such a tool: I regularly find things about it that are great, and add-ons that make it even better. At least as of 2019 when I was last writing Haskell, there was no Haskell tool that came even close to Cargo's ease-of-use and stability.

Again, I don't think Haskell tools are abysmal, they're just… fine. And at this point, I think my bar for tools has gotten higher than “just fine”.

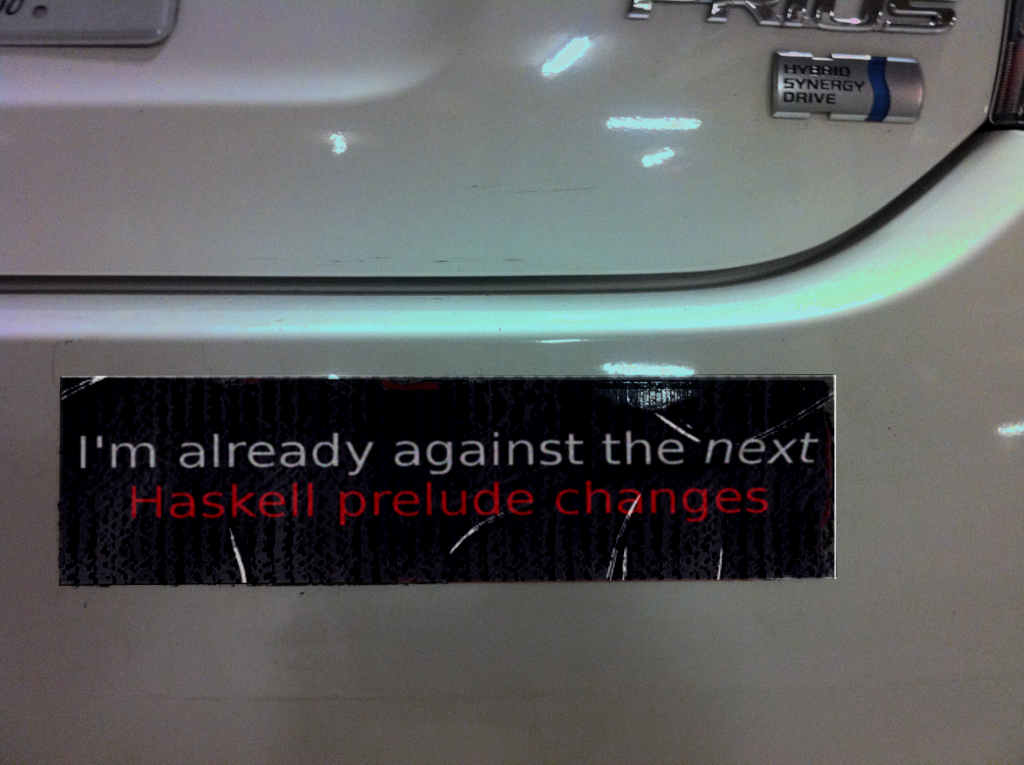

Finally, I mentioned the constant changes, by which I mean the way that the Haskell language itself undergoes regular backwards-incompatible revisions. This has been going on for a long time: I mean, just look at this joke I tweeted back in 2015, in response to the Foldable-Traversable Prelude changes, sometimes at the time also called the “Burning Bridges Proposal”.

— getty (@aisamanra), 2015-10-15

The way that Haskell-the-language evolves—well, the way that GHC evolves, which is de facto Haskell since it's the only reasonable public[9] implementation—is that it gradually moves to correct its past missteps and inconsistencies even in pretty fundamental parts of the language or standard libraries. This is in many ways good: there's an attitude in some communities that past mistakes are unfortunate but we need to live with them. People in the Haskell community often reject this idea: why not improve things? Why not fix what we see as clunky mistakes in the standard library? These changes move the foundations of Haskell towards a cleaner and more consistent set of primitives, making it a better language overall.

But oh my god they're annoying.

Very few of the changes are particularly onerous—indeed, they often are accompanied by analyses which show that only such-and-such a fraction of Hackage projects will be affected by them—but they all amount to persistent friction. If I grab an old Haskell project, even one without any external dependencies, I can often safely assume that it won't build any more with any recent version of GHC because everything has been changed just a little tiny bit. The fixes are often tiny, but they add up. Things are always just a little different than they used to be, just different enough that they require your attention: adding a superclass there, removing an import there, adding a type signature since this thing turned just polymorphic enough that the code is ambiguous…

And you know what? It doesn't have to be like this. Look at the way Rust does updates with editions (called “epochs” when they were first proposed). The Rust language explicitly builds in contracts of backwards-compatibility: if you use things that are stable, then they won't intentionally break. Intentional breaking changes are hidden behind editions. I've got old Rust projects which I can return to and continue to build and develop without similar tedium from the language itself.

I think there's a common thread between these three things I mentioned: none of them, especially in isolation, are so painful that you can't just deal with them. You can write Haskell without fancy type features, evaluating the worthwhile ones and holding the line against the costly ones. You can use Haskell's perfectly alright tooling if you don't mind the occasional bug or missing feature or clunky workflow. You can follow compiler changelogs and do sporadic codebase updates every other release cycle. They're bumps in the road, but you've still got a road: if the destination is worth it, what's a few bumps?

I still think Haskell is a great language. The only thing that changed is my tolerance for the bumps.

What do I still miss from Haskell?

All the stuff I said about Haskell above? All that still holds and I genuinely think it's true. I miss being able to write and refactor code algebraically: even when writing Rust, a language which I very much like and which has a lot of the same strengths as Haskell, I miss the ability to do small mechanical refactorings and know that I'm maintaining the meaning of the program.[10]

And the type-system, too! It's true that other languages have developed more sophisticated type systems—mainstream languages in 2023 have a lot more you can do with static types than they did in 2009—but Haskell's type system still has features that others typically lack, even without the whole menagerie of extensions: abstracting over higher-kinded types is an obvious example here.
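For readers who haven't met the term, here is a small sketch of what abstracting over a higher-kinded type looks like in plain Haskell with no extensions. The function labelAll and the two example values are hypothetical names chosen for illustration.

```haskell
-- f stands for a type constructor such as [] or Maybe, not a concrete
-- type; the signature abstracts over it with an ordinary class constraint.
labelAll :: Functor f => String -> f Int -> f String
labelAll prefix = fmap (\n -> prefix <> show n)

-- The same function used at two different containers.
labelledList :: [String]
labelledList = labelAll "item-" [1, 2, 3]

labelledMaybe :: Maybe String
labelledMaybe = labelAll "item-" (Just 7)
```

The point is only that f itself is a parameter: one definition of labelAll covers lists, Maybe, and any other Functor.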

The Haskell library ecosystem is a real mixed bag—from the typical half-maintained churn of open source libraries to the weird little kingdoms of individual authors trying to reinvent the universe in their own image to the entire tedious gamut of sadly-necessary-but-all-different string-like types—but there are some pretty spectacular libraries and abstractions in Haskell that are only half-possible in other languages. Lenses, for example, are really cool in a way that's hard to grasp if you haven't used them much. I look forward to seeing them poorly half-implemented in mainstream languages in the 2030s.

Haskell libraries also are often declarative by necessity, but those APIs end up being a pleasure to use: a favorite example here is the Brick terminal UI library, whose simple declarative building blocks end up producing by far the best TUI library I've ever used, but the diagram-creation library diagrams or the music-writing library music-suite are other great examples. In these cases, there's often nothing preventing similar libraries from existing in other languages, but they tend to get built in Haskell first by pure functional necessity.

A thing that seems small, but which I miss a ton, is do-notation. Or, well, I don't care as much about do notation specifically as “any notation which lets you add more bindings without adding indentation for each level of binding”, which Haskell lets you do with both do-notation and with terse lambda syntax and optional operators. There are many abstractions—monadic and not—where nesting lambdas would be a convenient way of expressing APIs, but in most languages nesting callbacks like this ends up being a big ugly hassle of rightward drift. Consider this Ruby usage of flat_map to come up with a list of possible ice cream servings, where each additional axis adds yet another layer of indentation:

    def all_flavors =
      [:vanilla, :chocolate, :strawberry].flat_map do |flavor|
        [:cone, :cup].flat_map do |container|
          [:sprinkles, :nuts, :fudge].flat_map do |topping|
            ["a #{container} of #{flavor} ice cream with #{topping} on top"]
          end
        end
      end

The equivalent Haskell here?

    allFlavors :: [String]
    allFlavors = do
      flavor <- ["vanilla", "chocolate", "strawberry"]
      container <- ["cone", "cup"]
      topping <- ["sprinkles", "nuts", "fudge"]
      pure ("a " <> container <> " of " <> flavor <>
            " ice cream with " <> topping <> " on top")

…yes, I know this is a cartoon example. However, there are plenty of places where having Haskell's sugar can be incredibly powerful. You can, for example, build a convenient and good-looking library for async/await-style structured concurrency on top of do-notation by yourself in an afternoon, while most other mainstream languages had to deal with vicious bikeshedding for years before finally coming up with a halfway-usable async/await syntax[11], to say nothing of the ability to trivially embed concurrent transactions or probabilistic programs in Haskell code using the exact same lightweight sugar.
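To give a rough feel for the async/await claim, here is a deliberately tiny sketch built only on forkIO and MVar from base. Task, spawn, await, and sumConcurrently are hypothetical names, and this is not structured concurrency proper: there is no cancellation, and an exception in the spawned action is not propagated to the caller of await.

```haskell
import Control.Concurrent (forkIO)
import Control.Concurrent.MVar (MVar, newEmptyMVar, putMVar, readMVar)

-- A handle to a result that some other thread will eventually deliver.
newtype Task a = Task (MVar a)

-- Run an IO action in a new thread and hand back a Task for its result.
spawn :: IO a -> IO (Task a)
spawn action = do
  result <- newEmptyMVar
  _ <- forkIO (action >>= putMVar result)
  pure (Task result)

-- Block until the Task's result has been delivered, then return it.
await :: Task a -> IO a
await (Task result) = readMVar result

-- Ordinary do-notation is all the await syntax this needs.
sumConcurrently :: IO Int
sumConcurrently = do
  left <- spawn (pure (sum [1 .. 1000]))
  right <- spawn (pure (product [1 .. 5]))
  a <- await left
  b <- await right
  pure (a + b)
```

A production version would more likely reach for an existing concurrency library; the sketch only shows that no special syntax is required beyond the do-block.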

So when I say that I've fallen out of love with Haskell, it is definitely not because there's nothing in Haskell to love!

So should I use Haskell or not?

Regardless of what programming language you're talking about, there is always a single correct response to the question, “Should I learn or use this programming language?” And that answer is, “It ultimately depends on your goals.”

Are you trying to become a better programmer in general? Then yes, absolutely, learn Haskell! I think this article should make it clear that Haskell is a fascinating and powerful language, and I think the learning experience is more than worth it. Haskell made me a better programmer, and I will continue to think so even if I never write another line of Haskell in my life[12].

Are you trying to use it for something? Well, my answer is more restrained, but I don't think the answer is a clear “no”. I think you should be honest about the advantages you get from Haskell—and those advantages are real!—and weigh them against your personal or organizational tolerance for the bumps I've described. I know for a fact that it's not impossible to either individually or as a group get enough benefit out of Haskell that the paper-cuts I've described stay just that: tiny paper-cuts. I know this because I've worked at such organizations, because I've been such a person, and because I have many friends who remain Haskell people even as I've drifted away from it.

However, if you pressed me further for a commitment to a yes-or-no answer, my answer would be: no, don't use Haskell. If I were tasked with building an engineering organization, I'd personally stay away from establishing Haskell as the default language, because I know both the value of good tooling and the cost of bad tooling, and I'd rather find a language where I could offer programmers some of the best tooling with a stable language and a clear code ecosystem right away. But even then, I'm not 100% confident in that answer: after all, Haskell does offer some remarkable tools for program safety and correctness, and suffering through poor tooling and linting against unnecessary type families might—maybe, in the right contexts—be worth it for that extra safety[13].

It's kind of a bittersweet conclusion! I'm incredibly happy for the time I've spent learning and writing Haskell, and I think the world would be worse if Haskell wasn't in it. And in all honesty, I think part of the value we get out of Haskell is because of—and not in spite of—some of the rough edges above. I'm glad that people experiment with type system features I don't want to use, because with effort and research and time (and no small amount of obtuse error messages) those features will become the powerful type system features of tomorrow that underlie safer, more expressive, more powerful tools and languages! This, after all, is why Haskell's old motto was “Avoid success at all costs.”

It's just not for me any more.

1. To be totally fair, the experience of code refactors via algebraic manipulation is still possible in other languages, especially in non-pure functional languages like Scheme or SML where most code is unlikely to have side effects and side effects are more likely to be clearly marked. But Haskell's purity and laziness mean that such transformations are safer in general.

2. Especially over time and across people! You might have read that paragraph and thought, “What's the big deal? I know the method takes a non-empty list: that's not hard to remember!” But the challenge comes when you need to remember these invariants weeks, months, years later, or communicate them to other engineers working on the same code. It's so much more liberating to be able to express your API in such a way that it can't be used incorrectly by anyone!

3. What “diff-minimal update” means is that you can supply an existing INI file as well as a new updated structure, and the code will produce a new version of that INI file that retains comments, whitespace, and key ordering, but updates values as needed to correspond to the new in-Haskell structure.

4. Okay, full disclosure: I like the idea of this “Simple Haskell Initiative” a lot more than I like its execution. It's all well and good to say, “Don't use the whole language,” but there's only a tiny sliver of advice on what parts to leave behind, and all the people quoted don't actually agree on what subset to use. This “initiative” would be a lot better if it focused on education on how to accomplish tasks with Haskell subsets and rationale for what to drop, instead of the anodyne non-message of, “Don't use every feature!”

5. Or, worse, the fact that the simple approach uses IO somewhere.

6. I noticed after publishing this article that some people were confused about this point, since I complained about a compiler project which included “fancy types”, so to clarify: the thing I found to be not worth it in the context of compiler development was using a GADT to represent the target language's types in the host language, like this:

        data Expr :: * -> * where
          Lit :: Int -> Expr Int
          Bool :: Bool -> Expr Bool
          IsZero :: Expr Int -> Expr Bool
          IfThenElse :: Expr Bool -> Expr a -> Expr a -> Expr a

    In that example, if I try to write a term like IsZero (Lit 5), it'll accept it fine, but if I try to write IfThenElse (IsZero (Lit 5)) (Lit 3) (Bool False), Haskell will reject it as ill-typed because the two branches of the if-expression do not have identical types.

    That's not the same as the “trees that grow” approach I'm referencing here: the only commonality is that they include expressions with a type parameter. Instead, “trees that grow” is about reusing the same AST but gradually adding more information to it. For example, in a compiler, you often start by representing variables as raw strings, and then move to something more like a “symbol” type which can disambiguate shadowed names and perhaps point to the binding site of the variable. The “trees that grow” approach can allow you to

        data CompilerPhase = Parsed | Resolved

        data Expr (phase :: CompilerPhase)
          = Lam (Name phase) (Expr phase)
          | App (Expr phase) (Expr phase)
          | Var (Name phase)

        data Symbol = Symbol { symName :: String, symIndex :: Int }

        type family Name (t :: CompilerPhase) :: *
        type instance Name Parsed = String
        type instance Name Resolved = Symbol

    In this example, I'm reusing the same Expr type for all ASTs, but for Expr Parsed, the variable names in that AST are going to just be String, and for Expr Resolved, those variable names will be replaced with a richer Symbol type. There's a lot more you can do with this, but it's a very different approach than the first thing I described.

7. For context, when I started writing Haskell professionally, we had only had cabal and sandboxes were brand-new.

8. I'll get on a soapbox for this one for a moment. The intention behind hpack is good: custom formats can lack tooling or integrations with other software, while moving to a common format like YAML solves that problem. A YAML file will definitely have syntax-highlighting in the way a .cabal file might not, and you can generate a valid YAML file from an arbitrary programming language where you probably don't also have a library to generate a valid .cabal file.

    …but a custom format also gives you the power to express constructs in a simple, clear, domain-specific way that would have to get awkwardly shoehorned into another format (or left as strings which get interpreted in a special way.) A tool like hpack means that not only do you have to deal with YAML gotchas like the fact that 1.10 parses as 1.1 unless you quote it as a string, you also lose out on simple constructs like Cabal's conditional syntax. In hpack, you have to write this:

        when:
          - condition: flag(fast)
            then:
              ghc-options: -O2
            else:
              ghc-options: -O0

    which is the equivalent of the following snippet of Cabal:

        if flag(fast)
          ghc-options: -O2
        else
          ghc-options: -O0

    And honestly? I will take the latter over the former any day. I write version numbers and conditional configuration way more often than I have ever needed to produce a .cabal file from another piece of software. HPack makes uncommon workflows easier while making common workflows annoying.

9. Haskell is used by a number of financial institutions and I'm led to understand some of them have their own internal implementations. I don't know the current state of them: I think some of them have moved away from their internal implementations, but I don't know that for sure and was too lazy to research this during the writing of this blog post.

10. And I'm not just talking about side-effects! In Rust, it's possible for f(x) to do something slightly different than let y = x; f(y) because of how names affect lifetime heuristics, making this kind of mechanical refactoring a lot more finicky and contextual.

11. This is not actually a jab at Rust! I do agree that Rust's async story has ended up being kind of messy and complicated, but I think that's understandable, since Rust had a much harder task than almost any other language: implementing a usable and comprehensible async/await mechanism with manageable borrowing rules on top of a systems language that should expose those features with no heap allocation and as little runtime overhead as possible… that was a research problem done in the open, and I don't think it was ever going to produce a perfectly clean elegant core. The fact that it's succeeded in any small part is something I think is impressive, even if the end result has more sharp edges and visible seams than non-async Rust. (And I actually think Rust's postfix .await syntax is a great idea.)

12. And I will write more Haskell, if for no other reason than that I plan to continue supporting my s-expression library and my INI-parsing library as long as they have users.

13. …although in those cases I might gravitate towards Rust, which is at times a more finicky language but has significantly better tooling.