A Taste of Matzo: A Language for Random Text

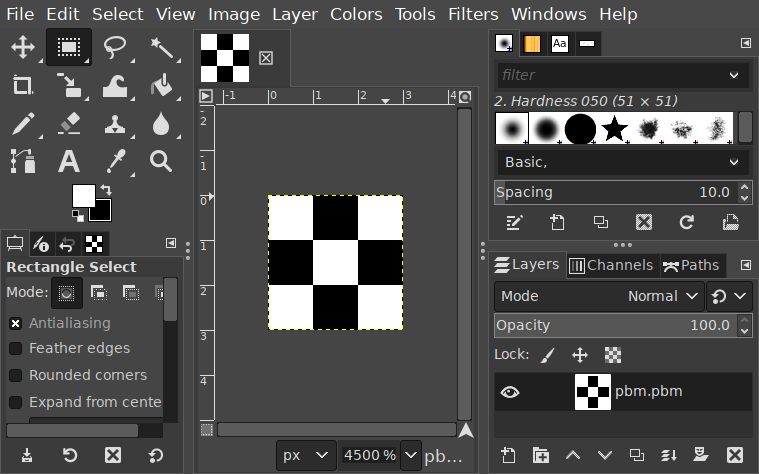

Matzo is a small, experimental, idiosyncratic programming language for creating random text¹, which is now in a reasonably usable state. It's still firmly alpha software (and it's a personal project I only work on sporadically, so I'm not making any promises about future stability), but it's usable enough that I feel comfortable at least blogging about it!

If you're familiar with Tracery², you probably already have a sense of the sorts of things you'd create in Matzo. Matzo aims at use-cases that are a bit more sophisticated, but only just: it's still a pretty simple tool at its core, designed for small-scale, human-directed text generation.

You can experiment with Matzo in your browser using a WebAssembly-compiled version of the Rust program. The playground doesn't let you save programs, but it puts the whole program into the URL bar, which means you can more or less “save” programs by bookmarking them. It also has a handful of examples you can look at in the top-right, and it keeps track of the random seed as well.

The rest of this blog post will be a whirlwind tour of the language!

Simple programs

The simplest program in Matzo just outputs some text, which is done with the top-level puts statement. Every statement in Matzo is either a definition or a puts that produces output. Statements are separated with semicolons, although the final semicolon is optional.

puts "Hello, world!";

The simplest operation in Matzo is string concatenation, which is so pervasive it's not done with an operator at all: you just put expressions next to each other to concatenate.

puts "Hello" ", " "world" "!";

Basic definitions are done with the := operator. We can pull out some of the expressions above and give them intermediate names:

greeting := "Hello";

target := "world";

puts greeting ", " target "!";

Let's make this program a tiny bit more interesting, though, and introduce some non-determinism. In Matzo, this is done with the disjunction operator, written as a vertical bar (|) between expressions. Let's make it choose between two possible greetings:

greeting := "Hello" | "Yo";

target := "world";

puts greeting ", " target "!";

This program can now produce two possible pieces of text: either Hello, world! or Yo, world! In this case, it produces the two with equal probability, but we can change that. One way to do so is to provide more alternatives: for example, if we want it to produce Hello three times as often as it produces Yo, we can simply repeat Hello three times:

greeting := "Hello" | "Hello" | "Hello" | "Yo";

target := "world";

puts greeting ", " target "!";

Because Matzo gives each choice within a single disjunction equal weight by default, this ends up as four equally-weighted possibilities, three of which happen to be identical: the program still produces either Hello, world! or Yo, world!, but it will produce the former ¾ of the time and the latter ¼ of the time.

But weighted randomness is common enough that Matzo has a shorthand for it! We can put a numeric weight and a colon in front of a branch to achieve the same effect, with all unweighted branches treated as having a weight of 1:

greeting := 3: "Hello" | "Yo";

target := "world";

puts greeting ", " target "!";

There's a bit of a gotcha with disjunction, too: the weights in a disjunction are computed without looking inside its branches, whether they're other random names, literal expressions, or even parenthesized expressions. This means parentheses can change the probabilities associated with a disjunction, since everything within the parentheses becomes a single “choice”. Consider the following three statements:

(* example one *)

puts "a" | "b" | "c";

(* example two *)

puts "a" | ("b" | "c");

(* example three *)

puts ("a" | "b") | "c";

Each of these lines might print a, b, or c when run, but they do so with different probabilities! The first one prints them all with equal likelihood; the second prints a half the time and each of the other options a quarter of the time; the third prints c half the time and each of the other options a quarter of the time. This can be a bit unintuitive: for a lot of mathematical operations, introducing parenthetical grouping like this has no effect on the outcome³.
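The arithmetic above can be checked with a small Python model. This is a hypothetical sketch, not Matzo's implementation. It assumes a disjunction can be represented as a list of (weight, branch) pairs, where each branch is either a string or another such list. It then computes exact output probabilities with `fractions.Fraction`. Each level divides its probability among its own branches by weight alone, and that is exactly why the parenthesized versions differ.

```python
from fractions import Fraction

# Hypothetical model: a leaf is a string; a disjunction is a list of
# (weight, branch) pairs, where each branch is a leaf or a nested disjunction.
def distribution(node, prob=Fraction(1)):
    """Return {output: exact probability} for a (possibly nested) disjunction."""
    if isinstance(node, str):
        return {node: prob}
    total = sum(weight for weight, _ in node)
    result = {}
    for weight, branch in node:
        # A branch's share depends only on its own weight at this level;
        # whatever is nested inside it only splits that share further.
        share = prob * Fraction(weight, total)
        for output, p in distribution(branch, share).items():
            result[output] = result.get(output, 0) + p
    return result

one = [(1, "a"), (1, "b"), (1, "c")]            # "a" | "b" | "c"
two = [(1, "a"), (1, [(1, "b"), (1, "c")])]     # "a" | ("b" | "c")
three = [(1, [(1, "a"), (1, "b")]), (1, "c")]   # ("a" | "b") | "c"

for name, program in [("one", one), ("two", two), ("three", three)]:
    print(name, {k: str(v) for k, v in distribution(program).items()})
```

The same model also shows that repeating a branch is equivalent to weighting it. Both `distribution([(1, "Hello")] * 3 + [(1, "Yo")])` and `distribution([(3, "Hello"), (1, "Yo")])` give Hello a probability of 3/4 and Yo a probability of 1/4.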

If you have several one-word string literals to choose from and you want them to have equal probability, Matzo has another piece of shorthand up its sleeve: the ::= operator lets you write space-separated bare words, each of which is treated as a string literal to randomly choose between⁴. We can rewrite our equal-weighted greeting program like this:

greeting ::= Hello Yo;

target ::= world;

puts greeting ", " target "!";

In this case, it doesn't save that much typing, but with many alternatives, it can be much more terse:

(* twelve options with literal-plus-disjunction *)

greekGod :=

"Zeus" | "Hera" | "Poseidon" | "Demeter" |

"Athena" | "Aphrodite" | "Artemis" | "Apollo" |

"Hephaestus" | "Ares" | "Dionysus" | "Hermes";

(* twelve options with the shorthand *)

romanGod ::=

Jupiter Juno Neptune Ceres Minerva Venus

Diana Apollo Vulcan Mars Bacchus Mercury;

Randomness and fixed values

The following is a Matzo program that uses the same value more than once:

x := "a" | "b";

puts x x;

This program can print aa, ab, ba, or bb, with equal probability. This demonstrates something important: bound names are re-evaluated each time they're used.

Sometimes, though, this isn't what we want! Imagine that we're building a short prose scene around a randomly-chosen character: we want the character's name to be random, but once it's chosen, we want that same name used consistently throughout the text. We can do this with the fix keyword, which goes before a definition:

fix x := "a" | "b";

puts x x;

This program can now only print aa or bb: once x is defined, it eagerly chooses between its possible random values, and subsequent uses of x are no longer random.
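One way to picture the difference is as lazy versus eager evaluation. The Python sketch below is a loose analogy, not how Matzo is implemented. An ordinary definition behaves like a function that reruns its random choice every time the name is used. A fix definition makes the choice once and then keeps returning the stored value. The function names are made up for illustration.

```python
import random

def unfixed_program(rng: random.Random) -> str:
    # x := "a" | "b";  -- every use of x reruns the random choice
    def x() -> str:
        return rng.choice(["a", "b"])
    return x() + x()   # puts x x;

def fixed_program(rng: random.Random) -> str:
    # fix x := "a" | "b";  -- the choice is made once, when x is defined
    chosen = rng.choice(["a", "b"])
    def x() -> str:
        return chosen
    return x() + x()   # puts x x;

rng = random.Random(42)
unfixed_outputs = {unfixed_program(rng) for _ in range(1000)}
fixed_outputs = {fixed_program(rng) for _ in range(1000)}
print(sorted(unfixed_outputs))
print(sorted(fixed_outputs))
```

Over many runs, the unfixed version shows up to all four outputs, while the fixed version only ever produces aa or bb.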

With this, we can write a program like the one described above:

fix strangerName ::= Austin Carter Logan Sage;

puts "You come across your friend " strangerName "!";

puts "You haven't seen them in " ("weeks" | "months" | "years") ".";

puts strangerName " looks " ("pleased" | "thrilled" | "frustrated") " to see you.";

Functions

There are a lot of situations in text generation where you might want simple function-like operations. For example, let's look at this program:

fix color ::= red brown white;

fix animal ::= cat dog rabbit;

puts "You see a " color " " animal ".";

puts "You shout out, \"Hey! " color " " animal "!\""

This will produce output like

You see a white dog.

You shout out, "Hey! white dog!"

The problem, of course, is that the output text here isn't capitalized according to English conventions. You could try to change the structure of the text so you don't need capitalization, but for situations like these, Matzo provides built-in functions to help: in this case, you might use the str/capitalize function:

fix color ::= black brown white;

fix animal ::= cat dog rabbit;

puts "You see a " color " " animal ".";

puts "You shout to it: \"Hey! " str/capitalize[color] " " animal "!\""

Functions are given lower-case names and are called with square brackets. Functions can have more than one argument, separated by commas. A good example of this is the rep function.

Imagine that you're generating random non-English words according to some schema. Often, that'll involve repeating the same syllable some number of times. You could write a program like this:

consonant ::= p t k w h n;

vowel := ("a" | "e" | "i" | "o" | "u") (4: "" | "'");

syllable := 4: consonant vowel | vowel;

word := syllable syllable

| syllable syllable syllable

| syllable syllable syllable syllable

| syllable syllable syllable syllable syllable

;

puts word

This produces simple nonsense words that roughly resemble Hawaiian or another Polynesian language, like piweka'kiwe or tuhau'te. But that definition for word is pretty repetitive! Luckily, we can use the rep function for this situation. It takes two arguments: the first is an integer number of repetitions, and the second is the expression to repeat. We can refactor the above definition to this:

word := rep[2, syllable]

| rep[3, syllable]

| rep[4, syllable]

| rep[5, syllable]

;

But we can go even further! Most of that structure is the same, so we can fold the randomness into the first argument instead:

word := rep[2 | 3 | 4 | 5, syllable];

And there's one final bit of Matzo shorthand we can use here: if we want to generate integers from a range, we can simply write that range as x..y, and Matzo will randomly choose a number from that range, including both endpoints. So that definition can become

word := rep[2..5, syllable];
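For readers who think more easily in a general-purpose language, here is a rough Python rendering of the whole word generator. It's an illustrative sketch, not a translation that Matzo produces. The helper names `weighted` and `rep` are hypothetical. Each Matzo definition becomes a zero-argument function, so that every use reruns its random choices, as in Matzo. Python's `random.randint(2, 5)` includes both endpoints, which matches the inclusive `2..5` range.

```python
import random

rng = random.Random()

def weighted(*branches):
    """Pick one (weight, thunk) branch in proportion to its weight, then run it."""
    weights = [weight for weight, _ in branches]
    _, thunk = rng.choices(branches, weights=weights)[0]
    return thunk()

def consonant():
    # consonant ::= p t k w h n;
    return rng.choice(["p", "t", "k", "w", "h", "n"])

def vowel():
    # vowel := ("a" | "e" | "i" | "o" | "u") (4: "" | "'");
    return rng.choice("aeiou") + weighted((4, lambda: ""), (1, lambda: "'"))

def syllable():
    # syllable := 4: consonant vowel | vowel;
    return weighted((4, lambda: consonant() + vowel()), (1, vowel))

def rep(n, thunk):
    """Concatenate n independent evaluations of thunk."""
    return "".join(thunk() for _ in range(n))

def word():
    # word := rep[2..5, syllable];
    return rep(rng.randint(2, 5), syllable)

for _ in range(5):
    print(word())
```

Because `rep` calls `syllable` afresh on each repetition, the syllables in a word differ from one another. This mirrors how unfixed Matzo names are re-evaluated on every use.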

A function that deserves special mention is se, the “sentence-ifier” function. You probably noticed before that we occasionally have to do a lot of work to capitalize words and manage spaces and punctuation: in fact, I've found that hands-down the most common bug in producing text from Matzo is getting spacing wrong, like this:

vehicle ::= car bus train bicycle;

puts "I want to take a " vehicle "to London."

Notice the problem? This program is missing a space inside a string literal, so it might produce a sentence like I want to take a carto London. To handle situations like this automatically, the se function takes any number of arguments and inserts spaces between them as necessary. It avoids producing multiple spaces in a row, doesn't put spaces next to punctuation that doesn't need them, and tries to capitalize the word at the beginning. That means we can rewrite the above program like this, with the spacing corrected, and se will handle the spaces for us:

vehicle ::= car bus train bicycle;

puts se["I want to take a", vehicle, "to London."];
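To make the spacing rules concrete, here's a rough Python approximation of what se does, based only on the behavior described above. It is not Matzo's actual algorithm, and the real function may treat punctuation, quotes, or capitalization differently. The sketch works in five steps:

* It trims and collapses whitespace in each argument.
* It drops empty arguments.
* It attaches arguments that begin with punctuation directly to the previous word.
* It joins everything else with single spaces.
* It capitalizes the first character.

```python
# Characters that attach to the previous word without a space.
# This set is an assumption made for illustration.
NO_SPACE_BEFORE = tuple(".,!?;:")

def se(*parts: str) -> str:
    """Rough sketch of a sentence-ifier: join parts with sensible spacing."""
    pieces: list[str] = []
    for part in parts:
        text = " ".join(part.split())  # trim the ends and collapse runs of spaces
        if not text:
            continue                   # e.g. the "" branch of ("do not" | "")
        if pieces and text.startswith(NO_SPACE_BEFORE):
            pieces[-1] += text         # no space before punctuation
        else:
            pieces.append(text)
    sentence = " ".join(pieces)
    return sentence[:1].upper() + sentence[1:]

print(se("I want to take a ", "car", "to London."))
print(se("alice has a", "cat", "named", "Spot", ", while", "King", "is Bob's", "dog", "."))
```

Both calls produce well-spaced sentences, even though the first argument of the first call has a stray trailing space. In the second call, the leading lower-case word gets capitalized.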

There are a handful of other built-in functions, including those for working with data types I haven't talked about yet, like tuples. But we can also write our own! We do that with the fn keyword. For example, we can define our own naïve pluralizing function like this:

fn plural[word] => word "s";

puts se["I bought four", plural["apple" | "orange" | "pear"], "today."];

In order to make our functions more useful, though, we should talk about…

Pattern-matching

Obviously we can't pluralize every English noun simply by adding an s to the end: English plurals are infamously irregular! Our naïve pluralizing function above will turn foot into foots, focus into focuss, and child into childs. Writing a complete pluralization function would be pretty hard, but we can do better with a few hard-coded special cases.

Matzo also supports defining a function by cases. To do this, we put curly braces around the body of the function and write each case separately, with the cases separated by semicolons:

fn plural {

["foot"] => "feet";

["child"] => "children";

["focus"] => "foci";

[word] => word "s"

};

puts plural["foot"];

This still isn't a complete pluralization function, of course, but now we can call plural["foot"] and it'll give us "feet" instead of "foots".

A reasonably common way that functions get used in Matzo is for generating abstract data and then using that abstract data in different concrete ways. For example, imagine that we're coming up with a random character generator and we want to support a set of gendered pronouns in the output: one way to do this is to choose from our list of supported genders, and use pattern-matching to choose specific gendered pronouns based on that gender:

fix gender := M | F | N;

fn subj {

[M] => "he";

[F] => "she";

[_] => "they"

};

fn obj {

[M] => "him";

[F] => "her";

[_] => "them"

};

puts "While walking, I came across a friend of mine.";

puts se["I hadn't seen", obj[gender], "in so long!"];

puts se[subj[gender], "greeted me warmly."];

There's actually a lot more I could say about pattern-matching (in particular, the way pattern-matching interacts with non-determinism is very subtle and involves some very nuanced trade-offs), but that might have to wait for a future deep-dive blog post, since this one is already quite long!

Other data types

You've already seen numbers. Matzo supports integers and has a few mathematical operations, although because complicated math is relatively rare in the kinds of programs Matzo is intended for, it doesn't support the typical mathematical syntax: you instead have to write add[2, mul[3, 4]] for that kind of thing. You've also seen the range syntax, which can be very useful. In fact, you could replicate a basic dice-rolling program with just puts 1..6;.

You've also technically seen atoms already, but I didn't draw attention to them. Identifiers that start with a capital letter aren't variables; they're just… themselves. If it helps, you can think of them as a shorthand for capitalized strings, since they get printed as themselves (although Foo and "Foo" are not identical for the purposes of pattern-matching). They're particularly useful for abstract data that doesn't show up in the output directly but drives the output in some way.

Another very useful kind of data is tuples, which are sequences of expressions. They're written with angle brackets and there are a lot of built-in functions for working with them. I like using tuples when creating random words, especially when I want an unusual or flavorful orthography for the words.

Consider Italian orthography, where the letter c is pronounced like the English “ch” before the front vowels e and i, and like the English “k” before other vowels. If I wanted to produce random words with an orthography like this, I'd approach it by generating the underlying sounds first, with each syllable represented as a tuple, and then processing those to build the orthography using helpers like tuple/map and tuple/rep:

(* given a syllable represented as a tuple,

* figure out an Italianate orthography for it *)

fn syllableToOrthography {

(* a "ch" sound followed by a front vowel

* is written with the bare letter `c` *)

[<"tʃ", "e">] => "ce";

[<"tʃ", "i">] => "ci";

(* a "ch" sound followed by other vowels

* is written with `ci` plus the other vowel *)

[<"tʃ", "a">] => "cia";

[<"tʃ", "o">] => "cio";

[<"tʃ", "u">] => "ciu";

(* a hard "k" sound followed by a front

* vowel is written with `ch` *)

[<"k", "e">] => "che";

[<"k", "i">] => "chi";

(* all other hard "k" sounds are written

* with the letter `c` *)

[<"k", v>] => "c" v;

[<c, v>] => c v;

};

Finally, there are also records, which are bundles of data with named fields. I've found these most useful as a mechanism for creating a single conceptual “entity” with multiple random attributes while keeping them together. For example, in the following program, pet is going to represent a random pet, with a random name and a random type:

pet := {

name: "Spot" | "King" | "Skimbleshanks",

type: "cat" | "dog" | "rabbit",

};

fix alicePet := pet;

fix bobPet := pet;

puts se[

"Alice has a", alicePet.type, "named", alicePet.name,

", while", bobPet.name, "is Bob's", bobPet.type, "."

];

puts se[

"Bob's", bobPet.type, "and Alice's", alicePet.type, ("do not" | ""), "get along well."

];

Notice how we can create the record with the {field: expression, field: expression, ...} syntax, and can pull out fields using the record.field syntax. We can also pattern-match on them using a syntax similar to the one used to create them.

A bigger example

Well, let's put this together and create a simple fake language! The goal here is to come up with random words with a particular flavor, given both as pronunciations in the International Phonetic Alphabet as well as a more casual transcription in the Latin alphabet. Firstly, let's create our consonants and vowels, represented in IPA, and with some weights to make certain sounds more likely:

cons := "p" | 2: "t" | "k"

| 2: "m" | 3: "n" | 2: "ŋ"

| "s" | "ʃ" | 2: "ɾ";

vowel ::= a i u;

Now, we can create a syllable. Each syllable here will be a record, with the various parts of the syllable represented as fields:

syll := {

onset: cons,

nucleus: vowel,

coda: 5: "" | "ʔ",

};

Now, a word will be several syllables. Notice the weighting of the number here: a word can be a single syllable, but most of the time (10 times out of 11) it'll have multiple syllables.

fix word := tuple/rep[1 | 10: (2..5), syll];

Why did we fix it? Well, remember that we want to produce two bits of output, the orthographic spelling and the IPA pronunciation, and both should be drawn from the same random word, which here we'll just call word.

Producing the IPA transcription is easy enough: since we're using IPA for all the actual sounds, we simply need to pull them out of the record and put them next to each other:

fn syllToIpa [{onset:, nucleus:, coda:}] => onset nucleus coda;

Now, we need to process the IPA and come up with a Latin orthography. We can leave the vowels as-is, but we want to turn the IPA consonants into simple ASCII characters, so:

fn consToOrtho {

["ŋ"] => "ng";

["ʃ"] => "sh";

["ɾ"] => "r";

["ʔ"] => "'";

[c] => c;

};

With this, we can now turn a syllable into orthography as well:

fn syllToOrtho [{onset:, nucleus:, coda:}] =>

consToOrtho[onset] nucleus consToOrtho[coda];

Now we can finally produce our output! We use tuple/map to apply these functions to each syllable in word, and tuple/join to concatenate the results into a string. tuple/join takes an optional second argument for a separator:

ortho := tuple/join[tuple/map[syllToOrtho, word]];

ipa := "/ˈ" tuple/join[tuple/map[syllToIpa, word], "."] "/";

puts ortho " (pronounced " ipa ")";

This will produce structured output like the following examples:

mishu'ngi (pronounced /ˈmi.ʃuʔ.ŋi/)

ngiri'tana (pronounced /ˈŋi.ɾiʔ.ta.na/)

ku'mi (pronounced /ˈkuʔ.mi/)
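As a cross-check on how the pieces fit together, here is a rough Python port of the whole generator. It's a sketch for illustration only, not something Matzo ships. It mirrors the weights from the Matzo source and uses a frozen dataclass for the syllable record. Like `fix word`, it generates the word once, so the spelling and the IPA come from the same syllables.

```python
import random
from dataclasses import dataclass

rng = random.Random()

# cons := "p" | 2: "t" | "k" | 2: "m" | 3: "n" | 2: "ŋ" | "s" | "ʃ" | 2: "ɾ";
CONSONANTS = ["p", "t", "k", "m", "n", "ŋ", "s", "ʃ", "ɾ"]
CONSONANT_WEIGHTS = [1, 2, 1, 2, 3, 2, 1, 1, 2]
VOWELS = ["a", "i", "u"]  # vowel ::= a i u;

@dataclass(frozen=True)
class Syll:
    onset: str
    nucleus: str
    coda: str

def syll() -> Syll:
    return Syll(
        onset=rng.choices(CONSONANTS, weights=CONSONANT_WEIGHTS)[0],
        nucleus=rng.choice(VOWELS),
        coda=rng.choices(["", "ʔ"], weights=[5, 1])[0],  # 5: "" | "ʔ"
    )

def word() -> list[Syll]:
    # tuple/rep[1 | 10: (2..5), syll];  -- one syllable 1/11 of the time
    if rng.choices([True, False], weights=[1, 10])[0]:
        length = 1
    else:
        length = rng.randint(2, 5)  # inclusive, like 2..5
    return [syll() for _ in range(length)]

# consToOrtho: IPA consonants that need an ASCII spelling; others pass through.
CONS_TO_ORTHO = {"ŋ": "ng", "ʃ": "sh", "ɾ": "r", "ʔ": "'"}

def syll_to_ipa(s: Syll) -> str:
    return s.onset + s.nucleus + s.coda

def syll_to_ortho(s: Syll) -> str:
    return (CONS_TO_ORTHO.get(s.onset, s.onset) + s.nucleus
            + CONS_TO_ORTHO.get(s.coda, s.coda))

def render(w: list[Syll]) -> str:
    ortho = "".join(map(syll_to_ortho, w))
    ipa = "/ˈ" + ".".join(map(syll_to_ipa, w)) + "/"
    return f"{ortho} (pronounced {ipa})"

w = word()  # generated once, like `fix word`, then rendered both ways
print(render(w))
```

Feeding `render` the syllables m-i, ʃ-u-ʔ, and ŋ-i reproduces the first example above: mishu'ngi (pronounced /ˈmi.ʃuʔ.ŋi/).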

What's next for Matzo?

Well, there are still some bugs, some runtime inefficiencies, and some, quite frankly, bad parse error messages. And there are a few language features I'd like to experiment with! I feel like all the big parts are there, and it's eminently usable for a lot of random generation tasks, but it's definitely missing a few conveniences.

There are four bigger things I might try to pursue in Matzo's future, though:

- Firstly, I want to establish a richer standard library. There's a lot of helper functionality I end up recreating regularly, especially stuff to make producing grammatical English easier (e.g. plurals, verb agreement, pronouns, and so forth) and it'd be cool to be able to rely on that stuff when tossing off relatively quick programs.

- Second, I want easier interfaces to external data. It'd be great to have an interface to, say, data sets like Darius Kazemi's corpora project or APIs like Wordnik, so that your Matzo programs could conveniently pull in data and use it for other kinds of random generation.

- On a similar note, I want Tracery compatibility, in two different ways. Firstly, I think it'd be cool to be able to reference an external Tracery program and incorporate it into a Matzo program, so you could assemble individual Tracery parts into Matzo. Secondly, I think it'd be fun to experiment with converting a subset of Matzo programs to Tracery. Not every program can be turned into Tracery, since Tracery is, on purpose, much more limited in the sorts of “computation-like” things you can express. But Tracery is also much more widely implemented, and it'd be nice to take a well-formed subset of Matzo programs and use them with existing Tracery implementations.

- Finally, I'd like to take Matzo and turn the internals into a well-defined and tiny virtual machine. Why? Well, the virtual machine could be small enough that it's easily reimplemented but also easily embeddable in larger programs, at which point it'd be easy to take some Matzo programs and integrate them into, say, a Unity or Unreal Engine game to power dialogue or generation.

I mentioned earlier that I have two other language designs for this task, and I'd like to experiment with resurrecting them as well. One of them is a typed language that is otherwise very similar to Matzo. The other is a logical language based on Answer-Set Programming, which means it ends up resembling the popular Wave Function Collapse algorithm in some respects. Ideally, if I pursue the virtual machine approach, I'd like the same machine to serve as a backend for those other languages as well, so you could write in any of them and end up with runnable random-text programs.

That's pretty ambitious, and who knows if I'll get around to any of them, much less all of them. For now, though, you can absolutely play with Matzo in your browser or build it yourself. Feel free to reach out to me if you have thoughts, comments, bug reports, feature requests, or anything!

¹ I've actually created three such languages in the past, but I reimplemented Matzo in Rust a few years back and got it close to “releasable”, while the other two are badly bit-rotted Haskell programs that I have never resuscitated.

² If you are familiar with Tracery and want to see an illustrative comparison, here's a simplified example program from the Tracery tutorial:

{

"name": ["Arjun", "Yuuma", "Darcy"],

"animal": ["unicorn", "raven", "sparrow"],

"mood": ["vexed", "indignant", "impassioned"],

"story": ["#hero# traveled with her pet #heroPet#. #hero# was never #mood#, for the #heroPet# was always too #mood#."],

"origin": ["#[hero:#name#][heroPet:#animal#]story#"]

}

and here's an idiomatically equivalent program in Matzo:

fix name ::= Arjun Yuuma Darcy;

fix animal ::= unicorn raven sparrow;

mood ::= vexed indignant impassioned;

puts se[name, "traveled with her pet", animal, "."];

puts se[name, "was never", mood, ", for the", animal, "was always too", mood, "."];

³ The mathematical term for this is associativity: we would say that Matzo's disjunction operator is not associative. That said, when you hear that an operation is “non-associative”, you would usually expect the operator to have an implicit bracketing, so that one of the parenthesizations is identical to the expression without parentheses. The choice Matzo makes here is pretty weird!

⁴ You can also use quoted string literals with ::= if you want, and it'll let you intersperse them with bare words.